07/04/2023

Mr. Mrityunjay Ojha

On March 15, 2023, Google Search began rolling out the March 2023 core update, its first broad core update of the year. According to Google, the rollout could take up to two weeks.

The March 2023 core update applies to all content types in all regions and languages and is intended to reward high-quality websites. According to Google, nothing in this (or any other) core update targets specific pages or websites.

Instead, the update is intended to improve how Google's systems assess content overall. As a result, some pages that were previously under-rewarded may perform better in search results.

Some experts speculate that the update will cause the most problems for the black-hat SEO community, which uses tactics that violate Google's policies to rank higher in search results.

How does the update impact marketers?

Google has shared little detail about this core update. The full impact may take several weeks to become clear as Google's crawlers index and re-rank web pages.

Google will add more information to its ranking updates history page once the rollout is complete.

Some SEOs have reported changes since the update, such as declining traffic and weaker keyword rankings. Others have reported increased traffic, while some say it is too early to tell.

Crucial things to keep in mind

Following are some key points to keep in mind:

Be Patient: The effects of core algorithm updates are not felt immediately. It can take days or weeks for Google's crawlers to index and re-rank the web.

Track the Numbers: Keep track of your site's organic search traffic and keyword rankings. These metrics help you assess the update's impact and identify areas for improvement.

Quality is King: These updates aim to provide better search results, so focus on consistently creating valuable content that meets the needs of your target audience.

Remember the Fine Details: Technical aspects of a website, such as site speed, mobile compatibility, and proper indexing, play a large role in overall rankings. Give them the attention they deserve.

What can SEO practitioners do?

Some ranking changes seen during the rollout may be temporary and may reverse once the update is fully implemented. However, pages whose rankings keep shifting may need to be adapted.

While it's too early to determine the lasting impact of this update, marketers can monitor their sites closely and follow the four-step action plan below.

Closely Monitor Your Site’s Metrics

Keep an eye on your site's performance. Do you see any changes in visitors, sessions, time on site, keyword rankings, and so on?

Monitor these numbers carefully, particularly until the end of March, when the rollout is expected to be complete.
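One simple way to quantify a change is to compare average daily organic clicks in equal windows before and after the rollout start date. The sketch below assumes you have exported daily click counts (for example, from an analytics or search-performance report) into a dictionary keyed by date; the function name, the 14-day window, and the data shape are illustrative assumptions, not part of any specific tool.

```python
from datetime import date, timedelta
from statistics import mean

# Rollout start of the March 2023 core update.
UPDATE_START = date(2023, 3, 15)

def percent_change_around(daily_clicks, pivot, window_days=14):
    """Hypothetical helper: compare mean daily clicks in the `window_days`
    before `pivot` with the `window_days` starting at `pivot`.

    daily_clicks: dict mapping datetime.date -> click count (assumed export format).
    Returns the percent change of the "after" mean relative to the "before" mean.
    """
    window = timedelta(days=window_days)
    before = [c for d, c in daily_clicks.items() if pivot - window <= d < pivot]
    after = [c for d, c in daily_clicks.items() if pivot <= d < pivot + window]
    if not before or not after:
        raise ValueError("not enough data on one side of the pivot date")
    baseline = mean(before)
    if baseline == 0:
        raise ValueError("baseline average is zero; percent change is undefined")
    # Multiply before dividing to keep round numbers exact where possible.
    return (mean(after) - baseline) * 100 / baseline
```

Because the windows are the same length on both sides, weekday/weekend patterns roughly cancel out; a longer window smooths noise but reacts more slowly.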

Perform a Website or Content Audit

If you see a drop, run a content audit. Identify which pages are most affected and which search queries they were ranking for.

Compare your content with your competitors'. For example, a page that outranks yours for an exact-match query may offer direct (rather than indirect) knowledge of the topic.
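To find the pages most affected, you can compare per-page clicks over two equal-length periods (before and after the update) and sort by the largest drop. This is a minimal sketch assuming each period is a dictionary of URL path to total clicks; the function name and input format are hypothetical.

```python
def most_affected_pages(before, after, top_n=10):
    """Hypothetical helper: rank pages by change in clicks between two periods.

    before, after: dicts mapping URL path -> total clicks in each period
    (assumed to cover windows of equal length).
    Returns (url, clicks_before, clicks_after, change) tuples, largest drop first.
    """
    rows = []
    for url in set(before) | set(after):
        b = before.get(url, 0)  # page missing from a period counts as 0 clicks
        a = after.get(url, 0)
        rows.append((url, b, a, a - b))
    # Most negative change first; ties broken alphabetically for stable output.
    rows.sort(key=lambda row: (row[3], row[0]))
    return rows[:top_n]
```

The pages at the top of this list are natural starting points for the competitor comparison described above.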

Focus on Quality, Valuable Content

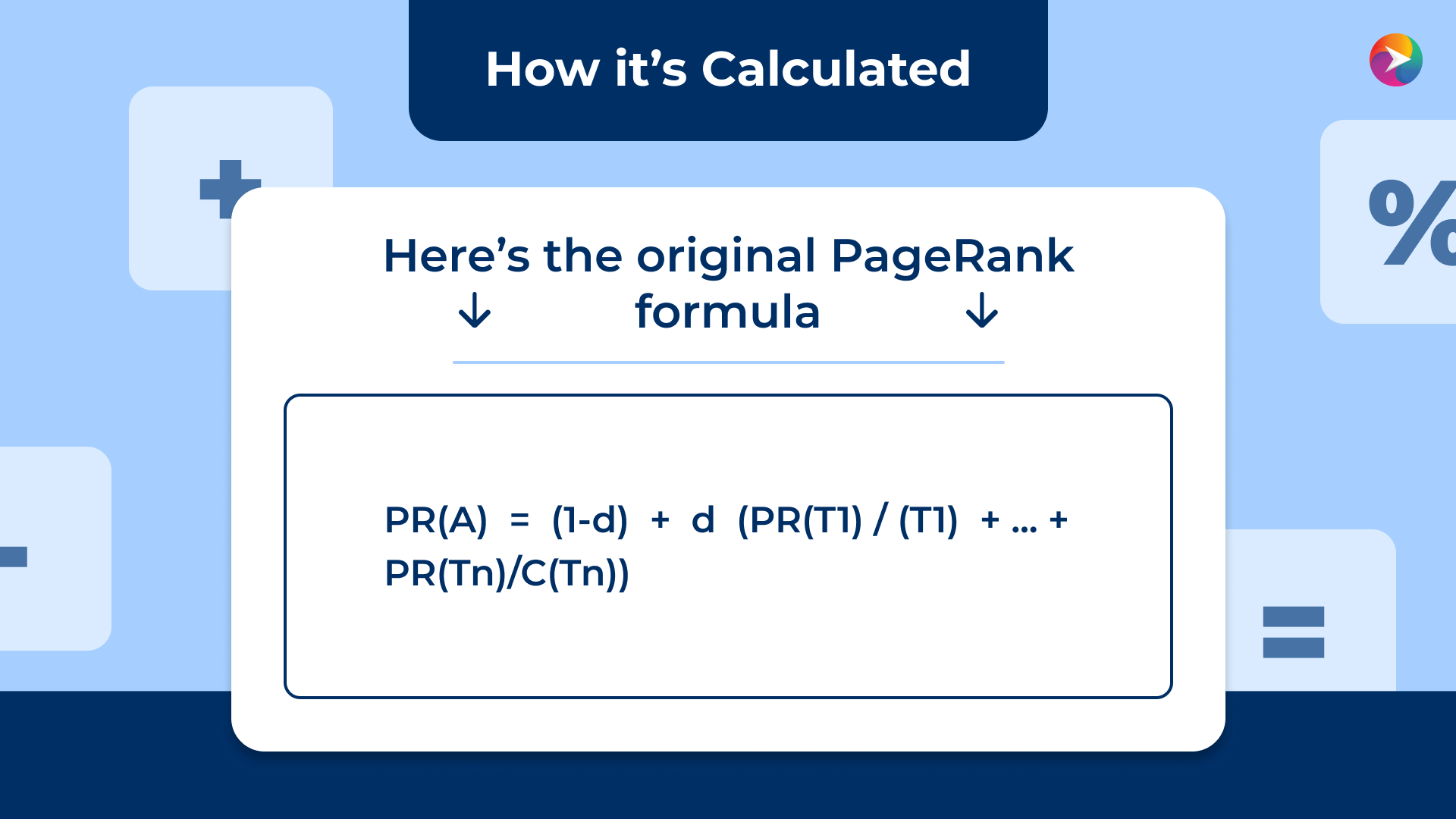

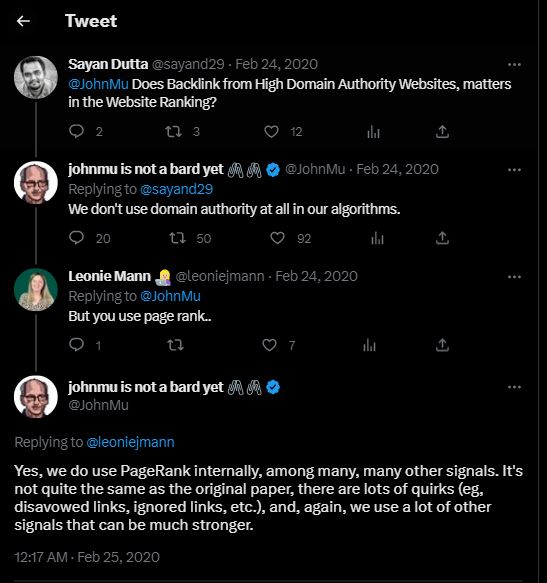

It's no secret that Google values quality content. E-A-T (Expertise, Authoritativeness, Trustworthiness) has been important in ranking content on Google's search engine results pages for years, and in December 2022 Google extended it to E-E-A-T by adding Experience.

Optimize Technical SEO

When ranking pages, Google also considers the technical aspects of websites, such as site structure, loading speed, and mobile optimization.

Now is an ideal time to address these areas and prioritize user experience. A technical SEO checklist might include the following:

- Check the website loading speed

- Identify and fix broken links

- Find and fix crawl errors

- Make sure your website is mobile-friendly

- Find and fix orphaned pages

- Add structured data
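Orphaned pages are pages that no internal link leads to, so crawlers following your site's links may never find them. One way to detect them, sketched below under our own assumptions, is to compare the URLs you expect to be indexed (for example, from your XML sitemap) with the pages reachable by following internal links from the homepage. The function name, the `internal_links` mapping, and the example paths are hypothetical; in practice the link graph would come from a site crawl.

```python
from collections import deque

def find_orphaned_pages(known_pages, internal_links, homepage="/"):
    """Hypothetical helper: return known pages unreachable from the homepage.

    known_pages: iterable of URL paths you expect to be indexed (e.g. from a sitemap).
    internal_links: dict mapping a page to the set of pages it links to.
    A breadth-first search from the homepage marks every reachable page; anything
    left over is treated as orphaned, including small clusters of pages that only
    link to each other.
    """
    reachable = {homepage}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in internal_links.get(page, ()):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(known_pages) - reachable)
```

Each page this returns either needs an internal link from a relevant, reachable page or, if it is obsolete, a redirect or removal.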

Final thoughts

If you’ve noticed a drop in traffic or rankings since Google’s latest algorithm update, don’t wait for things to improve on their own. Instead, build a technically sound website with quality content and a great user experience.
